Classes

- class CGmmState

Functions

- ToolGmm(V, K, numMaxIter=1000, prevState=None): helper function: gaussian mixture model

- updateGaussians_I(V, p, state)

- computeProb_I(V, state)

- initState_I(V, K)

Function Documentation

◆ computeProb_I()

computeProb_I(V, state)

Definition at line 72 of file ToolGmm.py.

    def computeProb_I(V, state):

        K = state.mu.shape[1]
        p = np.zeros([V.shape[1], K])

        # for each cluster
        for k in range(K):
            # subtract mean
            Vm = V - state.mu[:, [k]]

            # weighted gaussian
            p[:, k] = 1 / np.sqrt((2*np.pi)**Vm.shape[0] * np.linalg.det(state.sigma[k, :, :])) * np.exp(-.5 * np.sum(np.multiply(Vm, np.matmul(np.linalg.inv(state.sigma[k, :, :]), Vm)), axis=0).T)
            p[:, k] = state.prior[k] * p[:, k]

        # norm over clusters
        p = p / np.tile(np.sum(p, axis=1, keepdims=True), (1, K))

        return p
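The listing above takes the determinant and the explicit inverse of each covariance matrix and exponentiates the result directly. For high-dimensional or nearly singular covariances the determinant can overflow or underflow, and a row of `p` can underflow to all zeros before normalization. The following is a minimal sketch of the same posterior computation in the log domain, assuming the array layout used on this page (`V`: features x observations, `mu`: features x K, `sigma`: K x features x features, `prior`: K). The name `compute_resp_log` and its argument list are hypothetical and not part of ToolGmm.py.

```python
import numpy as np


def compute_resp_log(V, mu, sigma, prior):
    """Hypothetical log-domain variant of the posterior computation.

    V: (F, N), mu: (F, K), sigma: (K, F, F), prior: (K,)
    Returns p: (N, K) with each row summing to one.
    """
    F, N = V.shape
    K = mu.shape[1]
    log_p = np.zeros((N, K))

    for k in range(K):
        Vm = V - mu[:, [k]]                      # subtract mean, shape (F, N)
        _, logdet = np.linalg.slogdet(sigma[k])  # log of det(sigma_k)
        # squared Mahalanobis distance per observation, no explicit inverse
        maha = np.sum(Vm * np.linalg.solve(sigma[k], Vm), axis=0)
        log_p[:, k] = (np.log(prior[k])
                       - 0.5 * (F * np.log(2 * np.pi) + logdet + maha))

    # normalize over clusters; subtracting the row maximum first keeps
    # at least one exponential per row equal to one
    log_p -= np.max(log_p, axis=1, keepdims=True)
    p = np.exp(log_p)
    return p / np.sum(p, axis=1, keepdims=True)
```

For well-conditioned inputs this should agree with `computeProb_I` up to floating-point rounding; the difference only matters when the direct evaluation loses precision.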


◆ initState_I()

initState_I(V, K)

Definition at line 92 of file ToolGmm.py.

    def initState_I(V, K):

        prior = np.ones(K) / K

        # pick random points as cluster means
        mIdx = np.round(np.random.rand(K) * (V.shape[1]-1)).astype(int)

        # assign means etc.
        mu = V[:, mIdx]
        s = np.cov(V)
        sigma = np.zeros([K, V.shape[0], V.shape[0]])
        for k in range(K):
            sigma[k, :, :] = s

        # write initial state
        state = CGmmState(mu, sigma, prior)

        return state

Here is the caller graph for this function:

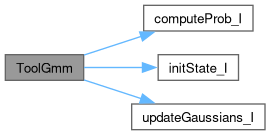
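`initState_I` draws the K indices independently, so the same observation can be selected more than once. Two clusters that start with identical mean, covariance, and prior receive identical posteriors and therefore stay identical through every update. A minimal sketch of drawing distinct indices instead, assuming K does not exceed the number of observations; `init_means_unique` and its `seed` parameter are hypothetical names, not part of ToolGmm.py.

```python
import numpy as np


def init_means_unique(V, K, seed=None):
    """Hypothetical helper: pick K distinct observations as initial means.

    V: (F, N) observations. Returns (mu, m_idx) with mu of shape (F, K).
    """
    num_obs = V.shape[1]
    if K > num_obs:
        raise ValueError("K must not exceed the number of observations")
    rng = np.random.default_rng(seed)
    # sampling without replacement guarantees no repeated index
    m_idx = rng.choice(num_obs, size=K, replace=False)
    return V[:, m_idx].copy(), m_idx
```

Passing a fixed `seed` also makes the initialization reproducible, which the listing above does not provide unless the global NumPy random state is seeded by the caller.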

◆ ToolGmm()

ToolGmm(V, K, numMaxIter=1000, prevState=None)

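helper function: Gaussian mixture model, estimated by alternating the posterior computation and the parameter update until the means stop changing or numMaxIter is reached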

- Parameters

- V: features for all training observations (dimension iNumFeatures x iNumObservations)

- K: number of Gaussians

- numMaxIter: maximum number of iterations (the loop stops here if it has not converged earlier; default: 1000)

- prevState: internal state that can be stored to continue clustering later

- Returns

- mu: means (iNumFeatures x K)

- sigma: covariance matrices (K x iNumFeatures x iNumFeatures)

- state: result containing internal state (if needed)

Definition at line 23 of file ToolGmm.py.

    def ToolGmm(V, K, numMaxIter=1000, prevState=None):

        # init
        if prevState is None:
            state = initState_I(V, K)
        else:
            state = CGmmState(prevState.mu.copy(), prevState.sigma.copy(), prevState.prior.copy())

        for j in range(numMaxIter):
            prevState = CGmmState(state.mu.copy(), state.sigma.copy(), state.prior.copy())

            # compute weighted gaussian
            p = computeProb_I(V, state)

            # update clusters
            state = updateGaussians_I(V, p, state)

            # if converged, break
            if np.max(np.sum(np.abs(state.mu-prevState.mu))) <= 1e-20:
                break

        return state.mu, state.sigma, state
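The convergence test in the listing compares the summed absolute change of the means against 1e-20. For double-precision values of order one, that threshold lies far below the spacing between representable numbers, so in practice the loop ends only when the means are bit-for-bit unchanged or when `numMaxIter` is reached. A minimal sketch of a scale-relative stopping test follows; `has_converged` and the default `rtol` are hypothetical choices for illustration, not part of ToolGmm.py.

```python
import numpy as np


def has_converged(mu_new, mu_old, rtol=1e-8):
    """Hypothetical stopping test: total mean shift relative to mean magnitude."""
    shift = np.sum(np.abs(mu_new - mu_old))
    scale = np.sum(np.abs(mu_old))
    # written as a product so that all-zero means need no division
    return bool(shift <= rtol * scale)
```

A relative test of this kind trades a few decimal places of agreement in the means for fewer iterations; whether that is acceptable depends on the application.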


◆ updateGaussians_I()

updateGaussians_I(V, p, state)

Definition at line 47 of file ToolGmm.py.

    def updateGaussians_I(V, p, state):

        # number of clusters
        K = state.mu.shape[1]

        # update priors
        state.prior = np.mean(p, axis=0)

        for k in range(K):
            s = 0

            # update means
            state.mu[:, k] = np.matmul(V, p[:, k]) / np.sum(p[:, k])

            # subtract mean
            Vm = V - state.mu[:, [k]]

            for n in range(V.shape[1]):
                s = s + p[n, k] * np.matmul(Vm[:, [n]], Vm[:, [n]].T)

            state.sigma[k, :, :] = s / np.sum(p[:, k])

        return state
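The inner loop over observations in the listing accumulates one weighted outer product per observation in Python. The same sum can be written as a single matrix product per cluster. The sketch below assumes the shapes used on this page (`V`: features x observations, `p`: observations x K); `update_gaussians_vec` is a hypothetical name, and unlike `updateGaussians_I` it returns new arrays rather than modifying a state object in place.

```python
import numpy as np


def update_gaussians_vec(V, p):
    """Hypothetical vectorized parameter update.

    V: (F, N) observations, p: (N, K) posteriors.
    Returns mu (F, K), sigma (K, F, F), prior (K,).
    """
    F, N = V.shape
    K = p.shape[1]
    weight = np.sum(p, axis=0)   # summed posterior per cluster, shape (K,)
    prior = weight / N           # same value as np.mean(p, axis=0)
    mu = (V @ p) / weight        # weighted means, shape (F, K)

    sigma = np.zeros((K, F, F))
    for k in range(K):
        Vm = V - mu[:, [k]]      # subtract mean, shape (F, N)
        # sum over n of p[n, k] * Vm[:, n] Vm[:, n]^T as one matrix product
        sigma[k] = (Vm * p[:, k]) @ Vm.T / weight[k]
    return mu, sigma, prior
```

The results should match the loop version up to rounding, while the number of Python-level iterations drops from K times the number of observations to K.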
